There are two reasons for this situation; one is reviewing and the other is charging.

In traditional publishing the established way of controlling quality is the reviewing process. Before anything is published in a journal, newspaper or book it will have been reviewed by at least one person, and maybe two or three. If authors want their work to be published, they have to take account of the reviewers’ comments. The process is not perfect, but it works pretty well.

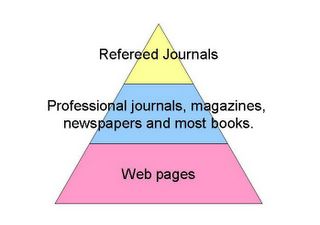

There is a quality hierarchy in publishing. At the top are the refereed journals, mainly written by academics. The content of these will have been reviewed by an editor and by between one and three referees. The referees should be people who are known to be knowledgeable about the subject of the article being reviewed. At the next level down are professional journals, magazines, newspapers and most books. The content of these will have been reviewed by an editor. The quality threshold is lower here because the editor may not know anything about the subject of the article. However, they will be able to correct bad writing.

At the bottom of the pyramid are web pages. No permission is required to publish, which means that usually only the author has looked at a web page (including weblogs) before it appears on the web. Of course there are advantages in imprimatur-free publishing, but the cost is often quality.

The second factor that affects the quality of web pages is the lack of a charging mechanism on the internet. If authors are paid for their work they have a powerful incentive to produce good work and more of it. The problem is that there is no mechanism on the internet that would allow an author to charge a few pence for a look at a well-researched and well-written web page. Other online networks did have such mechanisms. Minitel had its kiosk system. The proprietary networks, such as CompuServe, had ways of charging for content. The internet, because of its academic history and open source nature, does not have such a mechanism. The result is that writers are dependent upon advertising, egotism or altruism for their reward.

Of course, there is a part of the internet where pages are of high quality, the reviewing mechanism works and people get paid for their work. This is the so-called Deep Web, where publications sit behind subscription walls in databases such as Dialog, LexisNexis, IngentaConnect, WofK, ABI/Inform and many more. Most internet users do not realise these exist because the pages in these systems never appear in Google searches. Google only searches the poor-quality Surface Web. It is only relatively recently that Google has started to open a window into the Deep Web with its Google Scholar search engine.

Can we do anything to improve the quality of pages in the Surface Web, perhaps by trying to introduce a reviewing system for them? I do not think we should try. Free publishing is worth preserving.

Creating a micropayments system for the Surface Web would reduce the dependence on altruism and advertising and give authors an incentive to do quality work. Perhaps it would be possible to link a payment mechanism with RSS feeds. The problem would be coming up with a system that people would accept and use. We have all got too used to getting stuff for free on the internet.
